Marshall is the most well-known off-price store chain in the US. It belongs to the American company TJX Companies. The shop stocks varied collections of name-brand garments, shoes, accessories, furniture, various household items, and beauty items at lower costs. Marshall operates on an off-price retail market, allowing it to take excessive inventory from producers or other dealers and resell it at discounted prices that customers can purchase.

Shoppers can visit these outlets, where they get to choose from designers and branded apparel at below-market prices that a typical department store would offer. Marshalls stands out in the same way as the rest of retailers by providing different merchandise categories: fashion, decoration, and beauty which brings in many customers who are looking for brand talents and unconventional goods at discounted prices.

How to Use Web Scraping For Extracting Marshalls Stores Location Data

Furthermore, the provided store location data can enable businesses to carry out market research as well as competitive analysis. Marshall’s establishments can be analyzed to determine whether the location is oversaturated with retailers, what types of customers access the stores either having their own or competitors’ locations. Market research of this kind provides data to support decisions about the market area to be explored for expansion, the kind of products/services to offer, and the development of prices that allow businesses to reap profits while remaining competitive and dynamic.

A complete list of all Marshall retail locations in the United States, with geocoded addresses, phone numbers, open hours, stock tickers, and more, is available for rapid download.

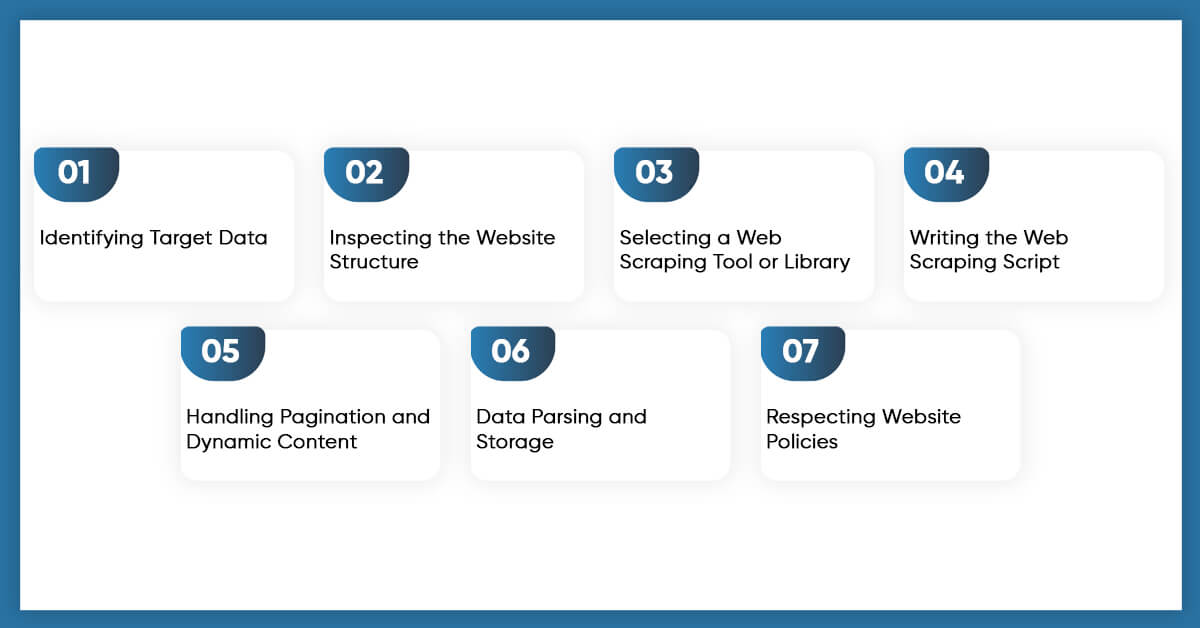

1. Identifying Target Data:

This step helps decide what data needs to be collected. For Marshalls store location data, experts can get things like where the stores are (addresses), which city and state they’re in, their zip codes, phone numbers to contact them, and maybe even when they’re open (store hours).

2. Inspecting the Website Structure:

Websites are made up of a special language called HTML, which is like the blueprint for how the website looks. By checking and analyzing Marshalls’ website’s HTML code, you can see how they have organized all the store information. This helps in analyzing its base to create a successful website. You might find that each store’s details are written inside specific HTML tags, like for a section of the webpage or for a paragraph.

3. Selecting a Web Scraping Tool or Library:

It is important to select the right tool and specific library for the data extraction process of the store datasets from Marshalls’ website. There are different tools available, but popular ones include BeautifulSoup (for Python) or Selenium WebDriver. With these tools, it becomes easier to collect and understand the information on a webpage.

4. Writing the Web Scraping Script:

Now it’s time to create strategies for the data scraping process. Experts write down a series of steps (a script) that tell your chosen tool how to go to Marshalls’ website, find the store details, and bring them back. Marshall store location data scraping can be easily done by writing specific codes in the selected script.

5. Handling Pagination and Dynamic Content:

Sometimes, the store details might be spread across many pages or might only be presented after searching for the next pages for the data scraping process. Your web scraping strategies need to be smart enough to handle these situations. Knowing how to navigate a large data library on the Marshalls Store website.

6. Data Parsing and Storage:

Once you’ve collected all the Marshall store location data, you need to clean the data with advanced tools and techniques. You can easily save the extracted data in a CSV file (like a spreadsheet), a JSON file (another way of organizing data), or even in a database (like a big digital filing cabinet).

7. Respecting Website Policies:

It is important to respect the policies of Marshall’s website to ensure a safe data extraction process. This means not putting in too many queries, not overloading the website and making sure you are utilizing legal and ethical data scraping practices.

Tools to Scrape Marshall Data

There is a diverse pool of tools that can be employed for extracting data using Python. Some popular ones include:

- BeautifulSoup:

Beautiful Soup, a Python package, is designed to parse HTML and XML documents. It constructs a parse tree for analyzed web pages according to defined criteria. This allows users to extract, navigate, search, and alter data from HTML, typically used for web scraping.

- Scrapy:

It is an excellent and user-friendly scraping tool for websites. This lets you make your own web crawlers and then run them; these crawlers quickly scan websites and extract data from them properly.

- Selenium:

The Selenium automation framework is a systematic method of coding that improves code maintenance by arranging code and data independently. Without frameworks, people may keep code and data in the exact location, reducing reusability and readability.

- Requests:

An HTTP ease and convenience for Python in its purest form. It enables you to make an HTTP proposal and handle the response easily; this is why it is quite convenient for multiple purposes.

Scraping the Marshalls Store Locator Data with Python

Let’s start with a practical approach. We will now focus on web scraping Marshalls store locations for just one zip code. This will give you hands-on experience and a clear understanding of the process.

We’ll harness the power of Python, a user-friendly and powerful tool for web scraping. Specifically, we’ll use the Selenium library to effortlessly retrieve the raw HTML source of Marshalls’ store locator page for zip code 30301, corresponding to Atlanta, GA.

Our goal is to use Python and Selenium to retrieve the raw HTML page from Marshalls store locator for specific zip codes or cities across the USA.

### Using Selenium to extract Marshalls store locator's raw html source

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import Select

import time

from bs4 import BeautifulSoup

import numpy as np

import pandas as pd

query_url = 'https://www.marshalls.com/us/store/stores/storeLocator.jsp'

option = webdriver.ChromeOptions()

option.add_argument("--incognito")

chromedriver = r'chromedriver_path'

browser = webdriver.Chrome(chromedriver, options=option)

browser.get(test_url)

text_area = browser.find_element_by_id('store-location-zip')

text_area.send_keys("30301")

element = browser.find_element_by_xpath('//*[@id="findStoresForm"]/div[3]/input[9]')

element.click()

time.sleep(5)

html_source = browser.page_source

browser.close()

Extraction of Marshall’s store information using BeautifulSoup

Using BeautifulSoup to extract Marshall store details involves leveraging this Python library to parse and extract specific information from the HTML source code of Marshall’s store locator pages. BeautifulSoup offers a simple interface for exploring and modifying HTML and XML documents.

Here’s how you can use BeautifulSoup to extract Marshall store details:

After obtaining the raw HTML source, employing a Python library called BeautifulSoup to parse these raw HTML files is preferable.

The extraction of store names:

# extracting Marshalls store names

store_name_list_src = soup.find_all('h3', {'class','address-heading fn org'})

store_name_list = []

for val in store_name_list_src:

try:

store_name_list.append(val.get_text())

except:

pass

store_name_list[:10]

#Output

['East Point',

'College Park',

'Fayetteville',

'Atlanta',

'Decatur',

'Atlanta',

'Atlanta (Brookhaven)',

'McDonough',

'Smyrna',

'Lithonia']

As a first step, we will begin by extracting each shop name and address.

Extracting store addresses of Marshall

You can use a tool called “Inspect” in Chrome to find the tags and class names for each address on a webpage. Just right-click on the address or part you want to know about and choose “Inspect.” This shows you the code that makes up the webpage, where you can find tags and class names that point to the addresses. Understanding this code helps you figure out how to grab the addresses you want when you’re scraping the web page.

# extracting marshalls addresses

addresses_src = soup.find_all('div',{'class', 'adr'})

addresses_src

address_list = []

for val in addresses_src:

address_list.append(val.get_text())

address_list[:10]

# Output

['\n3606 Market Place Blvd.\nEast Point,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30344\n',

'\n 6385 Old National Hwy \nCollege Park,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30349\n',

'\n109 Pavilion Parkway\nFayetteville,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30214\n',

'\n2625 Piedmont Road NE\nAtlanta,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30324\n',

'\n2050 Lawrenceville Hwy\nDecatur,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30033\n',

'\n3232 Peachtree St. N.E. \nAtlanta,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30305\n',

'\n150 Brookhaven Ave\nAtlanta (Brookhaven),\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30319\n',

'\n1930 Jonesboro Road\nMcDonough,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30253\n',

'\n2540 Hargroves Rd.\nSmyrna,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30080\n',

'\n8080 Mall Parkway \nLithonia,\n\t\t\t\t\t\t\t\t\t\t\t\tGA\n30038\n']

Extract phone numbers

We will collect the telephone numbers of the stores to compile the set of data for better data analysis.

# extracting phone numbers

phone_src = soup.find_all('div',{'class', 'tel'})

phone_list = []

for val in phone_src:

phone_list.append(val.get_text())

phone_list[:10]

# Output

['404-344-6703',

'770-996-4028',

'770-719-4699',

'404-233-3848',

'404-636-5732',

'404-365-8155',

'404-848-9447',

'678-583-6122',

'770-436-6061',

'678 526-0662']

The extraction of individual Marshalls shop URLs.

Each local retail location has its URL where further information is available.

# extracting store url for each store

store_list_src = soup.find_all('li',{'class','store-list-item vcard address'})

store_list = []

for val in store_list_src:

try:

store_list.append(val.find('a')['href'])

except:

pass

store_list[:10]

#Output

['https://www.marshalls.com/us/store/stores/East+Point-GA-30344/463/aboutstore',

'https://www.marshalls.com/us/store/stores/College+Park-GA-30349/1207/aboutstore',

'https://www.marshalls.com/us/store/stores/Fayetteville-GA-30214/687/aboutstore',

'https://www.marshalls.com/us/store/stores/Atlanta-GA-30324/353/aboutstore',

'https://www.marshalls.com/us/store/stores/Decatur-GA-30033/1098/aboutstore',

'https://www.marshalls.com/us/store/stores/Atlanta-GA-30305/621/aboutstore',

'https://www.marshalls.com/us/store/stores/Atlanta+Brookhaven-GA-30319/49/aboutstore',

'https://www.marshalls.com/us/store/stores/McDonough-GA-30253/859/aboutstore',

'https://www.marshalls.com/us/store/stores/Smyrna-GA-30080/644/aboutstore',

'https://www.marshalls.com/us/store/stores/Lithonia-GA-30038/836/aboutstore']

Geo-encoding

Geo-encoding means getting each store’s exact coordinates (like latitude and longitude). These coordinates are like the store’s address on a map.

Why do we need these coordinates? If we want to show the stores on a map (like on a computer screen or a phone), we need to know exactly where they are. Also, if we want to figure out how far one store is from another or how long it might take to drive from one to the other, we need these coordinates.

To get these coordinates, we can use Google Maps. It’s accurate, but it costs a bit of money. There are free options, but Google Maps might be more precise.

Extracting All Marshalls Retail Locations in the USA

Scaling up to develop a comprehensive crawler capable of extracting all Marshall retail locations across the United States presents various obstacles and concerns.

1. Iterating Through All US Zip Codes:

The initial scraper needs to be modified to iterate through all US zip codes to gather data for all Marshall store locations. This could involve generating a list of zip codes or using a database of US locations to ensure comprehensive coverage.

2. Handling Large Volume of Requests:

With over 100,000 zip codes in the USA, the scraper would need to make a large number of requests to Marshalls.com. However, repeatedly accessing the website from the same IP address can trigger server blocks or CAPTCHA challenges.

3. Dealing with IP Blocks and CAPTCHA Challenges:

As the number of requests increases, Marshalls.com servers may detect unusual activity and block the IP address or present CAPTCHA challenges to verify human users. To circumvent this, techniques such as rotating proxy IP addresses and user agents can make requests appear as if they are coming from different users and devices.

4. Using Rotating Proxy IP Addresses:

Rotating proxy IP addresses involves using a pool of IP addresses from different locations. By rotating between several proxies, the scraper may spread queries across different IP addresses, lowering the possibility of being identified and stopped by Marshalls.com servers.

5. Rotating User Agents:

Similarly, rotating user agents involves periodically changing the identification information sent with each request. This makes the requests appear to be coming from different web browsers or devices, further masking the scraper’s activity.

6. Utilizing CAPTCHA Solving Services:

In cases where CAPTCHA challenges cannot be avoided, external CAPTCHA-solving services like 2captcha or anticaptcha.com can be integrated into the scraper. These services often involve either human workers or advanced algorithms to pass the CAPTCHA codes, allowing the scraper to keep gathering the much-needed data.

Conclusion

Marshalls stores location data and helps businesses to get required data in the proper format. By applying modern tools and strategies, the Marshalls scraper can reduce the risk of blockade or detection and thus ensure the success of the scraping operation across all Marshall stores in the USA. As per analysis, there are 1204 Marshall stores in USA that indicates significant growth in several locations of USA. One of the primary reasons Marshalls appeals to customers is that it continuously refreshes its inventory, allowing shoppers to find new offers each time they visit. To ensure a safe locata data scraping process, rotating proxies for IP addresses can be easily done by using residential proxies. Locations Cloud utilizes advanced practices to extract Marshalls location data by utilzing built-in tools for strategic decision making process.